Search engine optimization (SEO) is all about making your website visible on search engines. But have you ever wondered how that visibility happens in the first place? The answer lies in two factors: crawlability and indexability.

Many people, including marketers, confuse the two and use the terms interchangeably. They do not mean the same thing, but they work together to ensure that your pages appear in the search results.

Here’s a quick guide to understanding both concepts.

What Are Website Crawlability and Indexability?

How do search engines work? Imagine the likes of Google and Bing as a massive library containing all the world’s information. A librarian’s job is to collect, organize, and make all this information accessible to anyone who needs it.

Similarly, the search engine’s job is to discover, understand, and index all the content on the Internet so that people can find it easily when needed. When they type their query, such as “salons in Las Vegas,” into the search box, they expect to see a list of relevant results in seconds.

However, for this to be possible, the search engine must first find all the web pages. That’s where web crawling comes into play.

What Is Web Crawling?

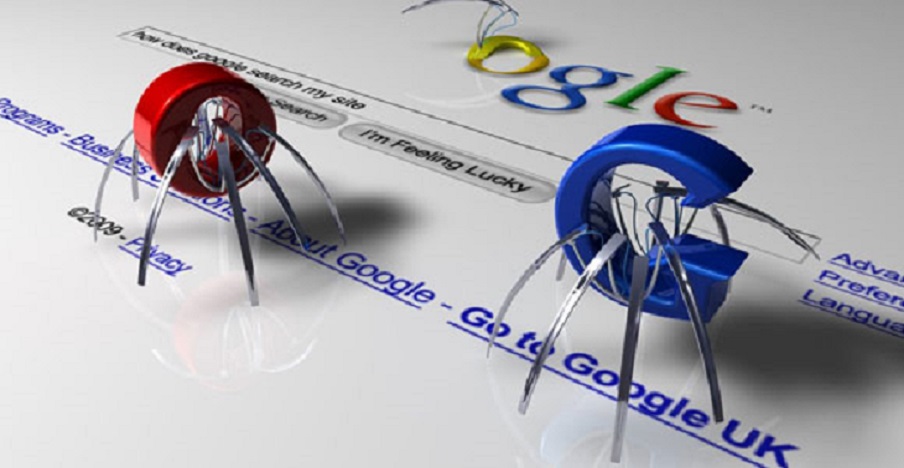

Web crawlers, also called web spiders or bots, are programs that browse the Internet and visit websites to read their content. They follow the links on each page to discover more pages and report what they find back to the search engine’s servers.

Crawling is how search engines discover new pages and update what they know about existing ones. Every time a spider visits a website, it looks for fresh content. If it finds any, it passes the new or updated pages on to be indexed.

The process of web crawling is similar to a person exploring a huge maze. The aim is to find a way out, but there are many dead ends and wrong turns along the way.
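To make the process concrete, here is a minimal sketch of a breadth-first crawler in Python. It is only an illustration, not how Google or Bing actually crawl: the `crawl` function and the in-memory `example_site` dictionary standing in for a real website are hypothetical, and a real crawler would fetch pages over HTTP, respect robots.txt, and throttle its requests. It follows links from page to page and records the dead ends (broken links) it runs into along the way.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl of one site, starting at start_url.

    `fetch` (hypothetical) returns a page's HTML, or None if the page is
    missing. Returns (pages found, dead-end URLs).
    """
    site = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    found, dead_ends = set(), set()
    while queue and len(found) + len(dead_ends) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            dead_ends.add(url)  # broken link: nothing to read here
            continue
        found.add(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments so each page is queued once.
            link, _fragment = urldefrag(urljoin(url, href))
            # Stay on the same site and skip pages already queued.
            if urlparse(link).netloc == site and link not in seen:
                seen.add(link)
                queue.append(link)
    return found, dead_ends


# Hypothetical four-page site with one broken link (/old-page).
example_site = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1#top">Post</a> <a href="/old-page">Old</a>',
    "https://example.com/blog/post-1": "<p>Hello</p>",
}
found, dead_ends = crawl("https://example.com/", example_site.get)
```

Here the crawler finds all four pages by following links from the home page and flags /old-page as a dead end, which is exactly the kind of hiccup that hurts crawlability.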

You can help search engines by focusing on your site’s crawlability. Crawlability refers to how easily a crawler can access and read the content on your pages. Your site is considered crawlable if bots don’t run into obstacles such as broken links or pages that return error codes while crawling.

What Is Indexability?

Indexability refers to whether search engines can process the content they find during a crawl and add it to their index.

The index is where all the information collected by web crawlers is stored. When someone types a query into the search engine, it will use the database to choose all the relevant results and rank them according to their relevance and usefulness.
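One common way to picture such an index is an inverted index: a map from each word to the pages that contain it. The Python below is a toy built on heavy assumptions: it ranks pages only by how often the query words appear on them, whereas real search engines weigh many more relevance and quality signals. The function names and sample pages are hypothetical.

```python
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into simple alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to {url: number of times the word appears on that page}."""
    index = defaultdict(dict)
    for url, text in pages.items():
        for word, count in Counter(tokenize(text)).items():
            index[word][url] = count
    return index


def search(index, query):
    """Return URLs containing any query word, highest total word count first."""
    scores = Counter()
    for word in tokenize(query):
        for url, count in index.get(word, {}).items():
            scores[url] += count
    return [url for url, _ in scores.most_common()]


# Hypothetical pages that have already been crawled.
pages = {
    "/salons": "Top salons in Las Vegas: hair salons and nail salons",
    "/vegas-food": "Where to eat in Las Vegas",
    "/seo-guide": "A guide to crawlability and indexability",
}
index = build_index(pages)
results = search(index, "salons in Las Vegas")  # ["/salons", "/vegas-food"]
```

The query never touches the original pages; it only reads the index, which is why a page that was never added to the index cannot show up in the results.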

4 SEO Tips to Improve Crawlability and Indexability

Crawlability and indexability are independent of each other. Just because bots can crawl your page doesn’t mean they will index it, and vice versa.

According to Digital Authority Partners, there are times when you must prevent spiders from indexing certain sections of your website. These can include pages with low-quality, outdated, or thin content.
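The usual way to keep a crawlable page out of the index is a noindex directive, placed either in a robots meta tag (`<meta name="robots" content="noindex">`) or in an `X-Robots-Tag` HTTP header. Crawlers can only obey it if they are allowed to fetch the page, which is one more reason crawling and indexing are separate questions. Here is a rough Python sketch that checks a page for these two signals; `is_indexable` is a hypothetical helper that ignores other factors (canonical tags, content quality, and so on) and does not handle crawler-specific header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from <meta name="robots"> tags in a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def is_indexable(html, x_robots_tag=""):
    """Return False if the page asks search engines not to index it.

    Checks the robots meta tag and an optional X-Robots-Tag header value.
    "none" is treated as equivalent to "noindex, nofollow".
    """
    parser = RobotsMetaParser()
    parser.feed(html)
    header_directives = {d.strip().lower() for d in x_robots_tag.split(",")}
    directives = parser.directives | header_directives
    return "noindex" not in directives and "none" not in directives


print(is_indexable('<meta name="robots" content="noindex, follow">'))  # False
print(is_indexable("<title>Services</title>"))  # True
```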

Overall, you want your site to be as crawlable and indexable as possible. Here are four SEO tips to help you:

1. Enhance Your Technical SEO

If you can do only one item on the list, improve your technical SEO. Technical SEO deals with the behind-the-scenes aspects of your website that help search engine bots easily find, read, and index your content.

You can refine it in three ways:

- Create an XML sitemap. An XML sitemap is like a table of contents for your website. It lists the URLs of your pages and tells search engines which pages you consider important. Create one using a sitemap generator tool or by writing the XML file yourself, upload it to your site (commonly as www.example.com/sitemap.xml), and point to it from your robots.txt file. Once you have it, submit it to Google Search Console.

- Pay attention to the site architecture. How you organize your website can either help or hinder the crawling process. A good site structure makes it easy for bots to find and index all your pages. Create an effective site structure by grouping similar topics together. For example, place all your posts about keyword research under one category.

- Speed up page load time. Search engines limit how much time and how many resources they spend crawling a website, often called the crawl budget. Once they reach that limit, they move on. So the faster your pages load, the more pages bots can crawl, and the better your chances of getting indexed. Test your page speed with Google’s PageSpeed Insights, which lists suggested improvements for each page.
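As a sketch of what a sitemap generator produces, the Python below builds a minimal XML sitemap following the sitemaps.org protocol: a `<urlset>` root with one `<url>` entry per page, each holding a `<loc>` and an optional `<lastmod>` date. The `build_sitemap` helper, URLs, and dates are hypothetical; you would save the output as a file such as sitemap.xml at your site’s root.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified) pairs.

    last_modified is a YYYY-MM-DD string, or None to omit <lastmod>.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


# Hypothetical pages, grouped under a /blog/ category as suggested above.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/keyword-research", None),
])
print(sitemap_xml)
```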

2. Strengthen Your Links

Crawling is a resource-intensive process. If your website is full of broken links or links to low-quality pages, crawlers can use up your crawl budget without finding anything useful.

Strengthen your links with these practices:

- Audit your links. Know the condition of your links. Are any of them broken or dead? Check your link profile using a backlink checker tool and get rid of invalid links. Fix 404 errors by redirecting the missing URLs (for example, with a 301 redirect) to relevant pages or by removing the links that point to them.

- Disavow only paid-for or unnaturally gained links. Many marketers immediately disavow spammy links, thinking they will hurt rankings. But it’s not always the best course of action to take. Save yourself the trouble by disavowing only those you know you didn’t gain naturally (e.g., you paid to get a backlink).
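A link audit boils down to requesting each URL and flagging the ones that fail. Here is a minimal Python sketch using only the standard library; `check_status` and `audit_links` are hypothetical helpers, not part of any backlink checker tool. It sends HEAD requests, which some servers reject, so a production tool would typically fall back to GET. Because `urlopen` follows redirects automatically, a redirected link is reported with the status of its final destination.

```python
import urllib.error
import urllib.request


def check_status(url, timeout=10):
    """Return the HTTP status code for url, or None if it can't be reached."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered with an error code such as 404
    except OSError:
        return None  # DNS failure, refused connection, timeout, etc.


def audit_links(urls, get_status=check_status):
    """Split URLs into working links and broken ones (4xx/5xx or unreachable)."""
    report = {"ok": [], "broken": []}
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            report["broken"].append((url, status))
        else:
            report["ok"].append((url, status))
    return report


# Offline example: fake status codes stand in for real HTTP requests.
fake_statuses = {
    "https://example.com/": 200,
    "https://example.com/old-post": 404,
    "https://unreachable.example/": None,
}
report = audit_links(fake_statuses, get_status=fake_statuses.get)
```

To audit live links, call `audit_links(urls)` without the `get_status` argument so it uses `check_status` and makes real requests.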

3. Use Robots.txt Files

A robots.txt file gives search engine bots directions on which pages they can and cannot crawl. It can also keep bots from crawling duplicate versions of your pages, such as URLs that differ only by tracking parameters.

You can create the file with a plain-text editor like Notepad or TextEdit and then upload it to the root directory of your website (for example, www.example.com/robots.txt).

Some common directives you can include in your robots.txt file are:

- Sitemap. It instructs the bot where to find your XML sitemap. Include the full URL of your sitemap so it will be easier for the bot to find it.

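- Allow/disallow. Use these to specify which folders or pages bots may crawl. For example, to stop all bots from crawling a certain page, add the line `User-agent: *` followed by `Disallow: /page-to-hide/` on the next line. Note that Disallow blocks crawling, not indexing: a blocked URL can still appear in Google’s results if other pages link to it. To keep a page out of the index, use a noindex directive on a page that bots are allowed to crawl.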

- Crawl-delay. It asks bots to wait a set number of seconds between requests to your site. It is useful if your server can’t handle too many requests at once. Keep in mind that Googlebot ignores this directive, although some other crawlers, such as Bingbot, honor it.
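Putting the three directives together, a robots.txt file for the hypothetical site www.example.com might look like the string below. Python’s standard `urllib.robotparser` module can read it and answer the questions a well-behaved crawler asks, which makes it a handy way to sanity-check your rules. This is a sketch: the module does simple prefix matching on paths and does not implement every extension Google supports (such as `*` wildcards inside paths), and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt using the Sitemap, Disallow, and Crawl-delay directives.
ROBOTS_TXT = """\
User-agent: *
Disallow: /page-to-hide/
Crawl-delay: 5

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask the same questions a well-behaved crawler would.
print(parser.can_fetch("*", "https://www.example.com/page-to-hide/"))  # False
print(parser.can_fetch("*", "https://www.example.com/blog/"))  # True
print(parser.crawl_delay("*"))  # 5
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```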

4. Create New or Update Content Regularly

One of the best ways to get search engines to visit your website more often (and keep your pages fresh in their index) is to regularly publish new or updated content. It shows that your site is active and that you’re putting out quality information that people will want to read.

How often you should post depends on many factors. For instance, if you publish news articles, you might need to release several stories a day. If you run a blog, publishing one or two quality posts per week should be enough. What matters most is consistency and content quality.

Final Words

Crawling and indexing are two of the most important processes in SEO. Without them, your website will not be visible on search engine results pages.

Make it a habit to check your website’s crawlability and indexability regularly. This way, you can quickly identify and fix any problems.

Use the tips from this article to improve your website’s ranking and visibility. You can also work with an SEO agency to amplify your efforts.
